In 2015, together with Noel Cressie, I started looking at the problem of estimating sources and sinks of greenhouse gases such as methane and carbon dioxide using ideas from spatial and spatio-temporal statistics. We quickly realised that this is an extremely difficult and inter-disciplinary problem, which requires a team with expertise in high-performance computing, atmospheric chemistry, and statistics.

In the early years (2015/2016), Noel and I made some in-roads into developing multivariate statistical models for regional flux inversion. We drew on expertise from the atmospheric chemists at my former host institution, the University of Bristol (Matt Rigby, Anita Ganesan, and Ann Stavert), and published two papers on this work, one in the journal Chemometrics and Intelligent Laboratory Systems and another in the journal Spatial Statistics. Following this, we started looking into global inversions using data from OCO-2 (a NASA satellite). Progress was slow initially. In 2018, however, Noel and I secured Australian Research Council funding, which allowed us to recruit Michael Bertolacci, a research fellow with the perfect skill set and inter-disciplinary mindset, and this is when the project really took off. Two years later, we are participants in the next global OCO-2 model intercomparison project (MIP) and contributors to a COP26 Global Carbon Stocktake product. Our final flux inversion system is called WOMBAT, which is short for the WOllongong Methodology for Bayesian Assimilation of Trace Gases, and a manuscript describing it has now been accepted for publication in Geoscientific Model Development. Other essential contributors to WOMBAT's development include Jenny Fisher and Yi Cao, and we have had help from many others too, as we acknowledge in the paper.

The Model

At the core of WOMBAT is a Bayesian hierarchical model (BHM) with three components. The first is a flux process model (i.e., a model for the sources and sinks). The second is a mole-fraction process model (i.e., a model for the spatio-temporally evolving atmospheric constituent). The third is a data model, which characterises the relationship between the mole-fraction field and the data that are ultimately used to infer the fluxes. A summary of the BHM is given below:

Each of these models is very simple to write down mathematically, but involves quite a lot of work to implement correctly. First, note from the above schematic that the flux process model involves a set of flux basis functions: these need to be extracted/constructed from several published inventories. We use inventories that describe ocean fluxes, fires, biospheric fluxes, and several others, so that the basis functions have spatio-temporal patterns that are reasonably similar to what could be expected from the underlying flux.

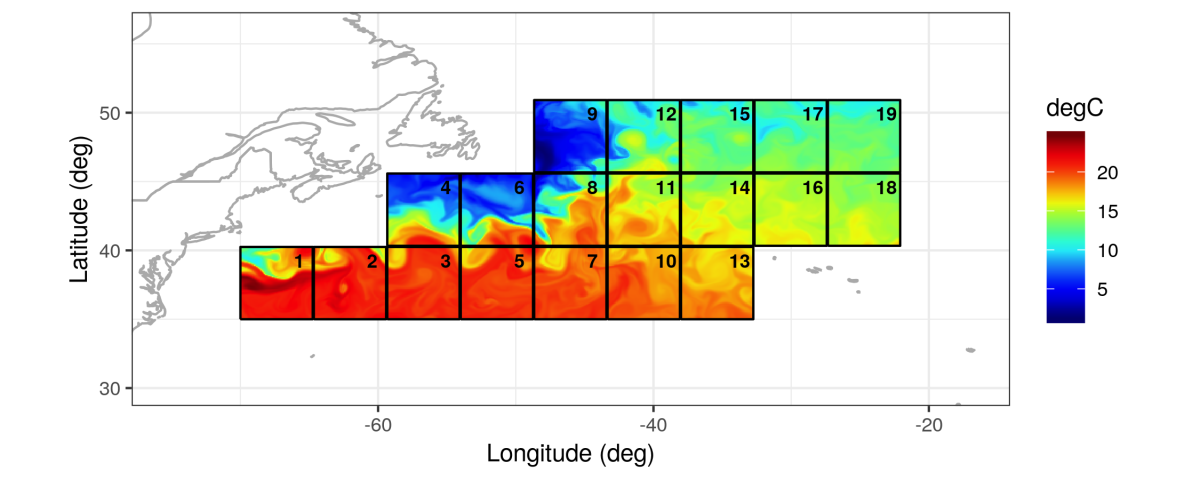
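To make the idea of flux basis functions concrete, here is a minimal sketch in Python with NumPy. It assumes that the flux field on a grid is written as a linear combination of basis functions with unknown scaling coefficients. The function name `flux_from_basis`, the grid size, the two toy "inventory" patterns, and the coefficient values are all hypothetical; they are not WOMBAT's actual basis functions.

```python
import numpy as np


def flux_from_basis(basis: np.ndarray, coeffs: np.ndarray) -> np.ndarray:
    """Combine basis functions into a flux field (hypothetical helper).

    basis:  shape (n_basis, n_time, n_lat, n_lon), one spatio-temporal
            pattern per basis function (e.g. derived from an inventory).
    coeffs: shape (n_basis,), the scaling factors that inference adjusts.
    Returns the flux field with shape (n_time, n_lat, n_lon).
    """
    if basis.shape[0] != coeffs.shape[0]:
        raise ValueError("need one coefficient per basis function")
    # Weighted sum over the basis-function axis.
    return np.tensordot(coeffs, basis, axes=1)


# Two toy patterns on a 2-step, 3x4 grid (hypothetical values).
n_time, n_lat, n_lon = 2, 3, 4
ocean = np.full((n_time, n_lat, n_lon), -0.5)  # uniform uptake everywhere
fire = np.zeros((n_time, n_lat, n_lon))
fire[1, 0, 0] = 2.0                            # a single fire event
basis = np.stack([ocean, fire])

# Coefficients of 1 would reproduce the inventories themselves;
# the inversion scales each pattern up or down.
flux = flux_from_basis(basis, np.array([1.0, 0.8]))
print(flux[1, 0, 0])  # -0.5 * 1.0 + 2.0 * 0.8, i.e. about 1.1
```

Because the flux is linear in the coefficients, inference only needs to estimate a modest number of scaling factors instead of a value at every grid cell and time step.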

Second, the mole-fraction process model involves running a chemical-transport (numerical) model. The chemical-transport model we use is GEOS-Chem, a global 3-D model that can simulate the transport and chemical reactions of gases when supplied with meteorological data as input. Running chemical-transport models is nerve-wracking because they have many settings and input parameters. A small mistake can waste a lot of computing time or, even worse, go undetected. We need the chemical-transport model to determine how the CO₂ fluxes affect the atmospheric mole fraction, which is ultimately what the instruments measure. Of course, we are relying on the model and the meteorological fields we use being “good enough.” However, we also account for some of the errors they introduce through v2 in the above schematic. In WOMBAT we acknowledge that these errors may be correlated in space and time, and we employ a simple spatio-temporal covariance model to represent this correlation. Accounting for the correlation considerably increases our uncertainty on the estimated fluxes; conversely, ignoring the correlation can lead to over-confident estimates.
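The effect described above, where accounting for correlated errors makes the flux estimates less certain, can be seen in a toy calculation. This Python/NumPy sketch is not WOMBAT's covariance model. It assumes a single unknown constant (a stand-in for a flux scaling factor), observations at equally spaced times, and an exponential temporal correlation with a made-up length scale. It then compares the variance of the generalised-least-squares estimate with and without that correlation.

```python
import numpy as np


def exponential_cov(times: np.ndarray, sigma: float, length_scale: float) -> np.ndarray:
    """Covariance matrix whose correlation decays exponentially with time lag."""
    lags = np.abs(times[:, None] - times[None, :])
    return sigma**2 * np.exp(-lags / length_scale)


def variance_of_mean_estimate(cov: np.ndarray) -> float:
    """Variance of the GLS estimate of a constant mean, 1 / (1^T C^{-1} 1).

    Under a flat prior this is also the posterior variance of the constant.
    """
    ones = np.ones(cov.shape[0])
    # Solve C x = 1 rather than forming the inverse explicitly.
    x = np.linalg.solve(cov, ones)
    return 1.0 / (ones @ x)


times = np.arange(50.0)  # e.g. 50 daily observations (hypothetical)
sigma = 1.0              # marginal error standard deviation (hypothetical)

var_indep = variance_of_mean_estimate(sigma**2 * np.eye(times.size))
var_corr = variance_of_mean_estimate(exponential_cov(times, sigma, length_scale=5.0))

print(f"assuming independence: {var_indep:.3f}")  # sigma^2 / n = 0.020
print(f"with correlation:      {var_corr:.3f}")   # noticeably larger
```

Correlated errors carry less independent information than the same number of uncorrelated ones. Treating them as independent would therefore report the smaller variance, which is the over-confidence mentioned above.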

Finally, the data themselves are highly heterogeneous. In this paper we focus on OCO-2 data, which are available to us as column retrievals. A column retrieval is the average dry-air mole fraction of carbon dioxide in a column of atmosphere extending from the satellite to the Earth's surface; this column is sometimes vertical but often slanted. These data are themselves the product of a retrieval algorithm, which is an inversion algorithm in its own right, and they are biased and have errors of their own. We account for all of this in the data model, which contains many parameters that need to be estimated. We use Markov chain Monte Carlo (specifically, a Metropolis-within-Gibbs sampler) to estimate all the parameters in our model.
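For readers unfamiliar with Metropolis-within-Gibbs, here is a minimal Python/NumPy sketch of the idea on a toy data model, not WOMBAT's. The residuals (retrieval minus modelled mole fraction) are assumed to equal an unknown constant bias plus independent Gaussian error. The bias has a conjugate Gaussian full conditional, so it receives an exact Gibbs draw. The error standard deviation has no convenient full conditional under the chosen prior, so it is updated with a random-walk Metropolis step on its logarithm. The priors, step size, function name, and simulated data are all assumptions chosen for illustration.

```python
import numpy as np


def metropolis_within_gibbs(resid, n_iter=5000, prior_sd_bias=10.0, step=0.2, seed=0):
    """Sample bias b and error s.d. sigma in resid = b + eps, eps ~ N(0, sigma^2).

    Priors (hypothetical): b ~ N(0, prior_sd_bias^2), log(sigma) ~ N(0, 1).
    Returns an (n_iter, 2) array of draws of (b, sigma).
    """
    rng = np.random.default_rng(seed)
    n = resid.size
    bias, log_sigma = 0.0, 0.0
    samples = np.empty((n_iter, 2))

    def log_target(ls, b):
        # Log-likelihood given b and sigma = exp(ls), plus the N(0, 1)
        # log-prior on ls; additive constants are dropped.
        sigma = np.exp(ls)
        return -n * ls - 0.5 * np.sum((resid - b) ** 2) / sigma**2 - 0.5 * ls**2

    for it in range(n_iter):
        # Gibbs step: b | sigma, data is Gaussian (conjugate update).
        sigma2 = np.exp(2.0 * log_sigma)
        post_var = 1.0 / (n / sigma2 + 1.0 / prior_sd_bias**2)
        post_mean = post_var * resid.sum() / sigma2
        bias = rng.normal(post_mean, np.sqrt(post_var))

        # Metropolis step: random-walk proposal on log(sigma), given bias.
        proposal = log_sigma + step * rng.normal()
        log_ratio = log_target(proposal, bias) - log_target(log_sigma, bias)
        if np.log(rng.uniform()) < log_ratio:
            log_sigma = proposal

        samples[it] = bias, np.exp(log_sigma)
    return samples


rng = np.random.default_rng(1)
# Hypothetical residuals (in ppm) with true bias 0.5 and error s.d. 1.0.
resid = 0.5 + rng.normal(0.0, 1.0, size=200)
draws = metropolis_within_gibbs(resid)[1000:]  # discard burn-in
print(draws.mean(axis=0))  # roughly [0.5, 1.0]
```

Mixing the two kinds of update is the point of the method. Parameters with tractable full conditionals are drawn exactly, and the rest are handled with Metropolis steps.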

Findings

So, what do we find? First, from a methodological perspective, we find that flux estimates can be badly affected if biases are present in the data. The same is true if correlations in the errors are present but not taken into account. Several inversion systems currently in use do not account for these. This is partly because the data are already largely de-biased before being used in an inversion, but mainly because accounting for correlations is computationally difficult. We also show that estimating biases while doing the inversion is possible, and that offline de-biasing can be complemented in this way.

Second, from a science point of view, we show that our flux estimates are largely in line with those from other inversion systems. We also show that (unsurprisingly!) the amount of carbon dioxide being injected into the atmosphere is very high, with humans to blame for this. Below, we show our estimates of total carbon dioxide surface flux, split by land and ocean and excluding fossil-fuel emissions, using data from a specific satellite mode (Land Glint [LG]). We also show the flux estimates from the first OCO-2 model intercomparison project (MIP), which largely agree with ours. Note that Earth's surface absorbs about 4 PgC (i.e., petagrams of carbon) per year on average. Unfortunately, humans are emitting on the order of 10 PgC of CO₂ per year into the atmosphere. This big discrepancy (approximately 6 PgC per year) means that carbon dioxide levels in the atmosphere will only keep on increasing, with deleterious effects on the climate we have learnt to live with.

With WOMBAT, as with most flux inversion systems, we can also zoom in to see where and when most carbon dioxide is being emitted or absorbed. This will become a main focus of ours as we prepare our submission to the COP26 Global Carbon Stocktake product.
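The methodological finding about biases can be illustrated with a toy linear regression. This Python/NumPy sketch is not WOMBAT's data model. It assumes observations that depend linearly on a few flux scaling factors through a made-up sensitivity matrix `H`, plus a single constant offset bias and independent noise. All values are hypothetical. Fitting without the offset pushes the bias into the flux coefficients, whereas adding the offset as an extra unknown recovers them.

```python
import numpy as np

rng = np.random.default_rng(42)
n_obs, n_basis = 500, 3

# Hypothetical sensitivities of each observation to each flux basis function
# (in a real system these would come from chemical-transport model runs).
H = rng.uniform(0.0, 1.0, size=(n_obs, n_basis))
alpha_true = np.array([1.0, -0.5, 2.0])  # hypothetical flux scaling factors
bias_true = 0.8                          # hypothetical constant data bias

y = H @ alpha_true + bias_true + rng.normal(0.0, 0.1, size=n_obs)

# Ignoring the bias: regress y on H alone.
alpha_naive, *_ = np.linalg.lstsq(H, y, rcond=None)

# Estimating the bias jointly: add an intercept column for the offset.
H_aug = np.column_stack([H, np.ones(n_obs)])
coef, *_ = np.linalg.lstsq(H_aug, y, rcond=None)
alpha_joint, bias_hat = coef[:-1], coef[-1]

print("naive:", alpha_naive.round(2))
print("joint:", alpha_joint.round(2), "bias:", round(bias_hat, 2))
```

In this toy setting the naive coefficients are all shifted upward. The reason is that a constant offset can be partly mimicked by any combination of basis functions whose sensitivities are positive on average.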

We are currently implementing several exciting upgrades to WOMBAT. For more information about the project, please do not hesitate to get in touch!